What is Kubernetes?

Kubernetes is a "container-orchestration system" which was open-sourced by Google in 2014. In simple terms, it makes containers easier to manage by automating tasks such as deploying, scaling, and restarting them.

Getting familiar with the Environment

If you are just starting with Kubernetes, use the tools supported by the Kubernetes community, or other tools from the ecosystem, to set up a Kubernetes cluster on your local machine.

Tools

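Install tools such as:

- kubectl, the command-line client for the Kubernetes API
- kind, which runs local clusters using Docker containers as nodes
- minikube, which runs a local cluster on your machine
- kubeadm, which bootstraps clusters
- crictl, a command-line client for CRI-compatible container runtimes
- Helm, a package manager for Kubernetes applications
- Kompose, which converts Docker Compose files into Kubernetes resources
- kui, a hybrid command-line and graphical interface for kubectl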

Get started by using some of these tools.

Why use Kubernetes?

Kubernetes, often abbreviated as “K8s”, orchestrates containerized applications to run on a cluster of hosts. The K8s system automates the deployment and management of cloud-native applications using on-premises infrastructure or public cloud platforms.

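Scaling an application is easier with Kubernetes than with virtual machines managed by hand. You declare how many copies of the application you want, and Kubernetes starts or stops containers to match. It keeps applications running by restarting failed containers, and it speeds up delivery by automating rollouts. The Kubernetes API also lets you automate many resource management and provisioning tasks.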

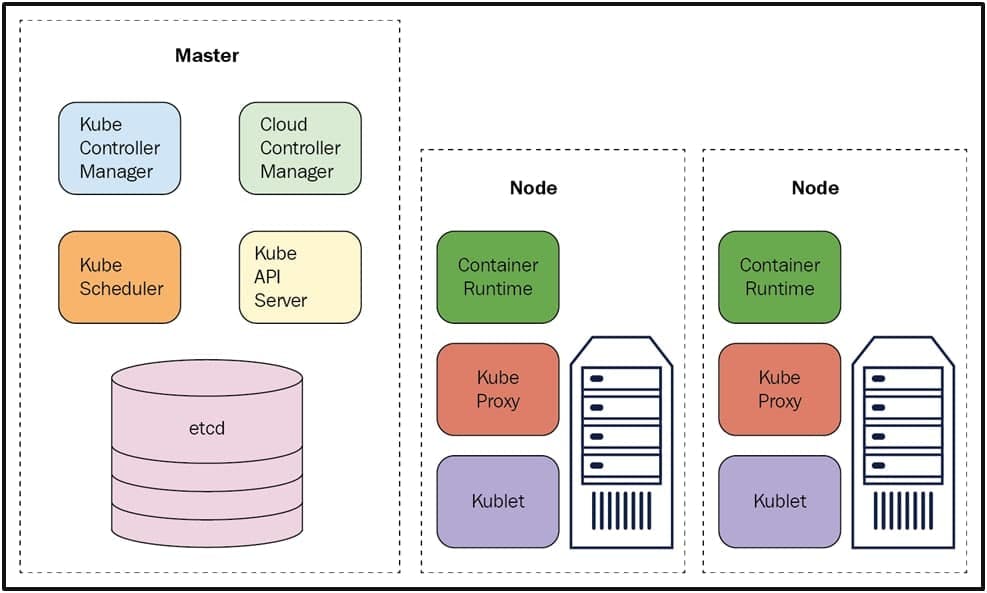

Kubernetes Architecture

The Kubernetes architecture provides a loosely coupled mechanism for service discovery across a cluster.

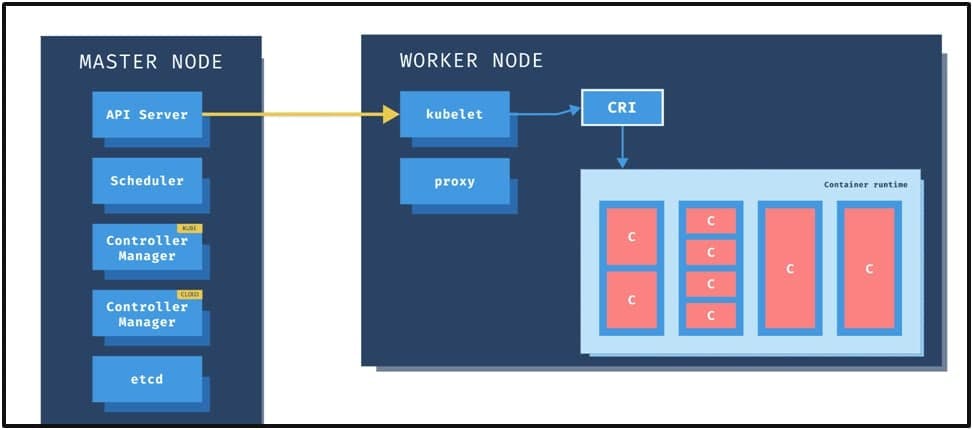

Kubernetes itself follows a client-server architecture. The master node runs an etcd cluster, kube-apiserver, kube-controller-manager, cloud-controller-manager, and kube-scheduler. The worker (client) nodes run the kube-proxy and kubelet components.

A Kubernetes cluster has two parts: the control plane and the nodes (the computing machines). Each node can be either a physical or a virtual machine and runs its own Linux environment. Every node runs Pods, which are composed of containers. Control plane components include the Kubernetes API server, the scheduler, and the controller manager, among others. Node components include a container runtime (such as Docker), the kubelet service, and the kube-proxy network proxy.

Different components of Master Node

The master server consists of several components: kube-apiserver, etcd storage, kube-controller-manager, cloud-controller-manager, kube-scheduler, and a DNS server for Kubernetes Services. Node components include the kubelet and kube-proxy, running on top of a container runtime such as Docker.

Scheduler

The scheduler assigns Pods to nodes. It places each Pod according to the constraints in its configuration file. For example, suppose the spec asks for 1 CPU core and 10 GB of memory and requires a node with an SSD disk type. Once this manifest is submitted to the API server, the scheduler looks for nodes that meet these criteria and schedules the Pod on one of them.
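The filtering idea described above can be sketched in a few lines of Python. This is a simplified illustration, not the real kube-scheduler. The `Node` and `PodRequest` classes, the `disktype` label, and the "most free memory wins" scoring rule are assumptions made for the example. The `node_selector` dictionary plays the role of a Pod's `nodeSelector` field. The real scheduler runs a configurable set of filter and score plugins.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    # Hypothetical, simplified view of a node: free capacity plus labels.
    name: str
    free_cpu_cores: float
    free_memory_gb: float
    labels: dict = field(default_factory=dict)


@dataclass
class PodRequest:
    # Hypothetical summary of a Pod spec's resource requests and node selector.
    cpu_cores: float
    memory_gb: float
    node_selector: dict = field(default_factory=dict)


def feasible(node: Node, pod: PodRequest) -> bool:
    """Filter step: the node needs enough free resources and matching labels."""
    if node.free_cpu_cores < pod.cpu_cores or node.free_memory_gb < pod.memory_gb:
        return False
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def schedule(pod: PodRequest, nodes: list[Node]) -> str | None:
    """Return the chosen node's name, or None if the Pod would stay Pending."""
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None
    # Score step (assumption): prefer the feasible node with the most free memory.
    return max(candidates, key=lambda n: n.free_memory_gb).name


nodes = [
    Node("node-a", 2, 8, {"disktype": "hdd"}),    # not enough memory
    Node("node-b", 4, 16, {"disktype": "ssd"}),   # fits
    Node("node-c", 0.5, 32, {"disktype": "ssd"}), # not enough CPU
]
pod = PodRequest(cpu_cores=1, memory_gb=10, node_selector={"disktype": "ssd"})
print(schedule(pod, nodes))  # node-b
```

If no node passes the filter, `schedule` returns `None`. This mirrors a Pod that stays Pending until a suitable node becomes available.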

API server

The API server is the gatekeeper for the entire cluster. All create, read, update, and delete (CRUD) operations on cluster objects go through its API. It validates and configures data for API objects such as Pods, Services, ReplicationControllers, and Deployments, and it exposes an API for almost every operation. To interact with this API, we use a command-line tool called kubectl (often pronounced "kube control"). kubectl talks to the API server to perform the operations we issue from the command line. In most setups, the master node does not run application containers. It manages the worker nodes and makes sure that they are running healthily.
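Under the hood, each kubectl command becomes an HTTP request to the API server's REST interface. The sketch below maps CRUD verbs to the HTTP method and URL path used for core (v1) Pods. The helper name `pod_request` is hypothetical. Real clients also handle authentication, TLS, and other API groups, for example `/apis/apps/v1` for Deployments.

```python
from __future__ import annotations

# Core-group resources such as Pods live under /api/v1;
# named groups (apps, batch, ...) live under /apis/<group>/<version>.
METHODS = {
    "create": "POST",    # POST to the collection creates a new Pod
    "list": "GET",       # GET on the collection lists Pods
    "get": "GET",        # GET on a named Pod reads it
    "update": "PUT",     # PUT replaces a named Pod
    "patch": "PATCH",    # PATCH partially modifies a named Pod
    "delete": "DELETE",  # DELETE removes a named Pod
}


def pod_request(verb: str, namespace: str = "default", name: str | None = None) -> tuple[str, str]:
    """Return (HTTP method, path) for a CRUD operation on Pods (hypothetical helper)."""
    if verb not in METHODS:
        raise ValueError(f"unsupported verb: {verb}")
    collection = f"/api/v1/namespaces/{namespace}/pods"
    if verb in ("create", "list"):
        return METHODS[verb], collection
    if name is None:
        raise ValueError(f"{verb} needs a Pod name")
    return METHODS[verb], f"{collection}/{name}"


print(pod_request("list"))                # ('GET', '/api/v1/namespaces/default/pods')
print(pod_request("delete", name="web"))  # ('DELETE', '/api/v1/namespaces/default/pods/web')
```

Roughly, `kubectl get pods` corresponds to the `list` case and `kubectl delete pod web` to the `delete` case. Running kubectl with `-v=6` or higher logs the requests it actually sends.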

Controller Manager

The controller manager runs several controllers, including:

- Node Controller
- Replication Controller
- Endpoints Controller
- Service Account and Token Controllers

Together, these controllers are responsible for the overall health of the cluster. They ensure that nodes are up and running and that the correct number of Pods, as declared in the spec file, are running at all times.
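Each controller runs a control loop. The loop compares the desired state, such as the replica count in a spec, with the observed state and acts to close the gap. Below is a toy sketch of that idea for a replication controller. The function name `reconcile` and the `<app>-<n>` naming scheme are hypothetical; real ReplicaSet Pods get random name suffixes. A real controller also watches the API server instead of working on an in-memory list.

```python
def reconcile(desired: int, running: list, app: str) -> list:
    """One pass of a toy control loop: create or delete Pods until the count matches."""
    pods = list(running)
    i = 0
    while len(pods) < desired:
        name = f"{app}-{i}"  # hypothetical naming scheme
        if name not in pods:
            pods.append(name)  # too few Pods: "create" one
        i += 1
    while len(pods) > desired:
        pods.pop()  # too many Pods: "delete" the most recently listed one
    return pods


state = ["web-0", "web-1", "web-2"]
state.remove("web-1")  # a Pod is lost, e.g. because its node failed
state = reconcile(3, state, "web")
print(state)  # ['web-0', 'web-2', 'web-1']
```

Because the loop only compares counts, running it repeatedly is harmless. This idempotence is what lets real controllers re-run their reconciliation whenever anything changes.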

etcd

etcd is a lightweight, distributed key-value database. It is the central store for the current state of the cluster at any point in time. Other Kubernetes components learn the state of the cluster through the API server, which reads from and writes to etcd. This makes etcd the single source of truth for all the nodes, components, and masters that form the Kubernetes cluster.
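By default, the API server stores each object in etcd under a key prefixed with `/registry`, for example `/registry/pods/<namespace>/<name>`. The class below is a toy in-memory stand-in, not etcd or its client API. It shows how a key layout like this answers questions such as "which Pods exist in this namespace?" with a simple prefix scan. The class and method names are hypothetical. The `revision` counter loosely mirrors etcd's revision number, which increases on every modification.

```python
from __future__ import annotations


class ToyKeyValueStore:
    """A toy, in-memory stand-in for etcd (hypothetical; not the etcd API)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}
        self.revision = 0  # bumped on every write, loosely like etcd's revision

    def put(self, key: str, value: str) -> int:
        self._data[key] = value
        self.revision += 1
        return self.revision

    def get(self, key: str) -> str | None:
        return self._data.get(key)

    def delete(self, key: str) -> None:
        if key in self._data:
            del self._data[key]
            self.revision += 1

    def list_prefix(self, prefix: str) -> list[str]:
        """Return the sorted keys under a prefix, like a range read."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyKeyValueStore()
store.put("/registry/pods/default/web-0", '{"status": "Running"}')
store.put("/registry/pods/default/web-1", '{"status": "Pending"}')
store.put("/registry/pods/kube-system/coredns-0", '{"status": "Running"}')
print(store.list_prefix("/registry/pods/default/"))
# ['/registry/pods/default/web-0', '/registry/pods/default/web-1']
```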

Worker Node

The worker nodes are the part of a Kubernetes cluster that actually runs the containers and applications. Besides a container runtime, they run two main Kubernetes components: the kubelet service and the kube-proxy service.

Components of Worker Node

Pods

A Pod is the smallest scheduling unit in Kubernetes. Where the virtualization world has virtual machines, the Kubernetes world has Pods. Each Pod consists of one or more containers. Sometimes you need to run two or more dependent containers together in one Pod, with one container helping another. An example is a helper that ships the logs written by a web server. Pods let us deploy such dependent containers together: a Pod acts as a wrapper around its containers, and we interact with and manage containers through Pods.
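Kubernetes accepts manifests in JSON as well as YAML. That means a Pod with a main container and a helper container can be built as a plain Python dictionary and printed as JSON. The Pod name, container names, images, and the shared `emptyDir` volume below are illustrative assumptions. You could save the output to a file and apply it with `kubectl apply -f`.

```python
import json

# Illustrative two-container Pod: an nginx web server plus a helper that follows its log.
# Both containers mount the same emptyDir volume, which exists as long as the Pod does.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-log-helper", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-helper",
                "image": "busybox:1.36",
                # Hypothetical helper: keep printing the web server's access log.
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

The containers share the Pod's volumes. They also share its network namespace, so they can reach each other on `localhost`.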

Kubelet

The kubelet is the primary node agent that runs on each worker node in the cluster. Its main job is to read the Pod specs submitted to the API server on the Kubernetes master and ensure that the containers described in those specs are running and healthy. If the kubelet notices that a container in a Pod on its node has failed, it restarts that container on the same node, according to the Pod's restart policy. If the fault is with the worker node itself, the Kubernetes master detects the node failure and can recreate the Pod on another healthy node. However, this only happens if the Pod is managed by a controller such as a ReplicaSet or ReplicationController, which ensures that the specified number of Pods is running at any time. If no such controller manages the Pod, the Pod dies and is not recreated anywhere. It is therefore advisable to run Pods through a Deployment or ReplicaSet rather than as standalone Pods.
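When a container keeps failing, the kubelet does not restart it immediately every time. According to the Kubernetes documentation, it waits with an exponential back-off delay (10s, 20s, 40s, ...) capped at five minutes. This is what the `CrashLoopBackOff` status reflects. The function below computes that schedule under those documented defaults. It is a sketch, not kubelet code, and it ignores the reset of the back-off after a container has run successfully for a while.

```python
def restart_delays(restarts: int, initial: int = 10, cap: int = 300) -> list:
    """Back-off delays (in seconds) before each of the first `restarts` restarts.

    The delay doubles after every failure and never exceeds `cap` seconds
    (10s, 20s, 40s, ... up to 5 minutes with the defaults).
    """
    return [min(initial * 2**attempt, cap) for attempt in range(restarts)]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```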

Kube-Proxy

kube-proxy is a network proxy that runs on every node. It maintains the network rules that implement Kubernetes Services, so that traffic sent to a Service reaches the right Pods, whether it comes from inside or outside the cluster. In its iptables mode, kube-proxy watches the API server for Services and their Pod endpoints and programs matching rules into iptables, the Linux packet-filtering firewall, which can also rewrite and route traffic. So when a new Pod is launched behind a Service, kube-proxy updates the iptables rules so that the Pod is reachable through that Service within the cluster.
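Conceptually, the rules kube-proxy writes map a Service's virtual IP and port to the current set of Pod endpoints, then pick one backend for each new connection. In iptables mode that choice is random. The sketch below models the table in Python. The class name, the Service IP `10.96.12.34`, and the Pod addresses are made up for illustration. In a real cluster the kernel redirects the traffic, not Python code.

```python
from __future__ import annotations

import random


class ToyServiceTable:
    """Hypothetical model of Service rules: (virtual IP, port) -> Pod endpoints."""

    def __init__(self) -> None:
        self._endpoints: dict[tuple[str, int], list[str]] = {}

    def set_endpoints(self, vip: str, port: int, pod_addrs: list[str]) -> None:
        # Called whenever the Pods behind a Service change, like a rule resync.
        self._endpoints[(vip, port)] = list(pod_addrs)

    def route(self, vip: str, port: int, rng: random.Random) -> str:
        """Pick a backend for a new connection, uniformly at random."""
        backends = self._endpoints.get((vip, port))
        if not backends:
            raise LookupError(f"no endpoints for {vip}:{port}")
        return rng.choice(backends)


table = ToyServiceTable()
table.set_endpoints("10.96.12.34", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
# A new Pod starts behind the Service, so the rules are refreshed to include it.
table.set_endpoints(
    "10.96.12.34", 80, ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.9:8080"]
)
rng = random.Random(0)
print([table.route("10.96.12.34", 80, rng) for _ in range(5)])
```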

Containers

Containers are runtime environments for containerized applications. The application runs inside the container, and containers in turn reside inside Pods. Containers are well suited to running microservices.

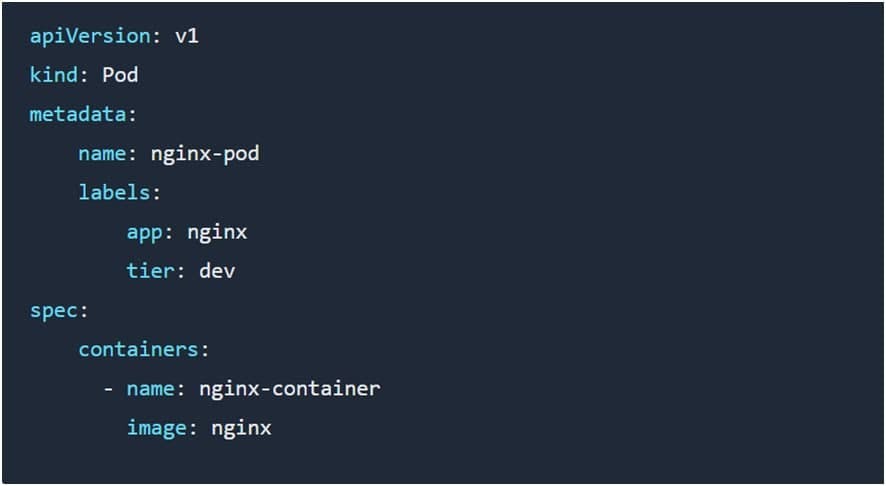

Pod Manifest file

We can define Kubernetes objects in two formats: YAML and JSON. Most Kubernetes objects have four required top-level fields: apiVersion, kind, metadata, and spec.

apiVersion

It defines the version of the Kubernetes API you’re using to create this object. Common values include:

- v1: the object is part of the first stable release of the core Kubernetes API. This group contains core objects such as Pod, ReplicationController, and Service.
- apps/v1: includes functionality related to running applications in Kubernetes, such as Deployment, ReplicaSet, and StatefulSet.
- batch/v1: includes objects related to batch processes and job-like tasks, such as Job and CronJob.
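The apiVersion groups above can be summarized in a small lookup table. Combined with a check for the four required top-level fields (apiVersion, kind, metadata, spec), this gives a rough manifest sanity check. The mapping covers only a few common kinds, and the helper names are hypothetical. Some kinds, such as ConfigMap, carry `data` instead of `spec`, so treat this as a rough check only. `kubectl api-resources` lists the authoritative version for every kind your cluster serves.

```python
# A few common kinds and the apiVersion they are served under (not exhaustive).
API_VERSIONS = {
    "Pod": "v1",
    "Service": "v1",
    "ReplicationController": "v1",
    "Deployment": "apps/v1",
    "ReplicaSet": "apps/v1",
    "StatefulSet": "apps/v1",
    "Job": "batch/v1",
    "CronJob": "batch/v1",
}

REQUIRED_FIELDS = ("apiVersion", "kind", "metadata", "spec")


def check_manifest(obj: dict) -> list:
    """Return problems found in a manifest; an empty list means it looks OK."""
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in obj]
    kind = obj.get("kind")
    expected = API_VERSIONS.get(kind)
    if expected is not None and obj.get("apiVersion") != expected:
        problems.append(f"{kind} expects apiVersion {expected}, got {obj.get('apiVersion')}")
    return problems


job = {"apiVersion": "apps/v1", "kind": "Job", "metadata": {"name": "report"}}
print(check_manifest(job))
# ['missing field: spec', 'Job expects apiVersion batch/v1, got apps/v1']
```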

Pod Termination

Since Pods represent processes running on your cluster, Kubernetes provides for graceful termination when Pods are no longer needed. Kubernetes implements graceful termination by applying a default grace period of 30 seconds from the time that you issue a termination request. A typical Pod termination in Kubernetes involves the following steps:

1. You send a command or API call to terminate the Pod.
2. Kubernetes updates the Pod status to reflect the time after which the Pod is to be considered "dead" (the time of the termination request plus the grace period).
3. Kubernetes marks the Pod state as "Terminating" and stops sending traffic to the Pod.
4. Kubernetes sends a TERM signal to the Pod's containers, indicating that they should shut down.
5. When the grace period expires, Kubernetes issues a SIGKILL to any processes still running in the Pod.
6. Kubernetes removes the Pod from the API server on the Kubernetes Master.

Note: The grace period is configurable. You can set your own grace period when requesting termination, for example with the --grace-period flag of the kubectl delete command, or set a default for a Pod with the terminationGracePeriodSeconds field in its spec.
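The SIGTERM-then-SIGKILL sequence can be reproduced on a single Linux or macOS machine with Python's `subprocess` module. This is only a local analogy of what happens to each container's main process. `terminate_gracefully` and `run` are hypothetical helpers, and the grace period is shortened to 1 second so the example finishes quickly.

```python
import signal
import subprocess
import sys

# Child that ignores SIGTERM, reports "ready" once its handler is set, then sleeps.
STUBBORN = (
    "import signal, time\n"
    "signal.signal(signal.SIGTERM, signal.SIG_IGN)\n"
    "print('ready', flush=True)\n"
    "time.sleep(60)\n"
)
# Child that keeps the default SIGTERM behaviour (terminate immediately).
POLITE = "import time\nprint('ready', flush=True)\ntime.sleep(60)\n"


def terminate_gracefully(proc: subprocess.Popen, grace_period: float = 30.0) -> str:
    """Send SIGTERM, wait up to grace_period seconds, then SIGKILL if still alive."""
    proc.send_signal(signal.SIGTERM)
    try:
        proc.wait(timeout=grace_period)
        return "stopped after SIGTERM"
    except subprocess.TimeoutExpired:
        proc.kill()  # on POSIX, Popen.kill() sends SIGKILL
        proc.wait()
        return "killed with SIGKILL"


def run(child_code: str, grace_period: float) -> str:
    proc = subprocess.Popen(
        [sys.executable, "-c", child_code], stdout=subprocess.PIPE, text=True
    )
    proc.stdout.readline()  # wait until the child has set up its signal handling
    result = terminate_gracefully(proc, grace_period)
    proc.stdout.close()
    return result


if __name__ == "__main__":
    print(run(POLITE, grace_period=1.0))    # stopped after SIGTERM
    print(run(STUBBORN, grace_period=1.0))  # killed with SIGKILL
```

The same reasoning applies inside a Pod. Applications should handle SIGTERM and finish in-flight work before the grace period runs out, or they will be killed with SIGKILL.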